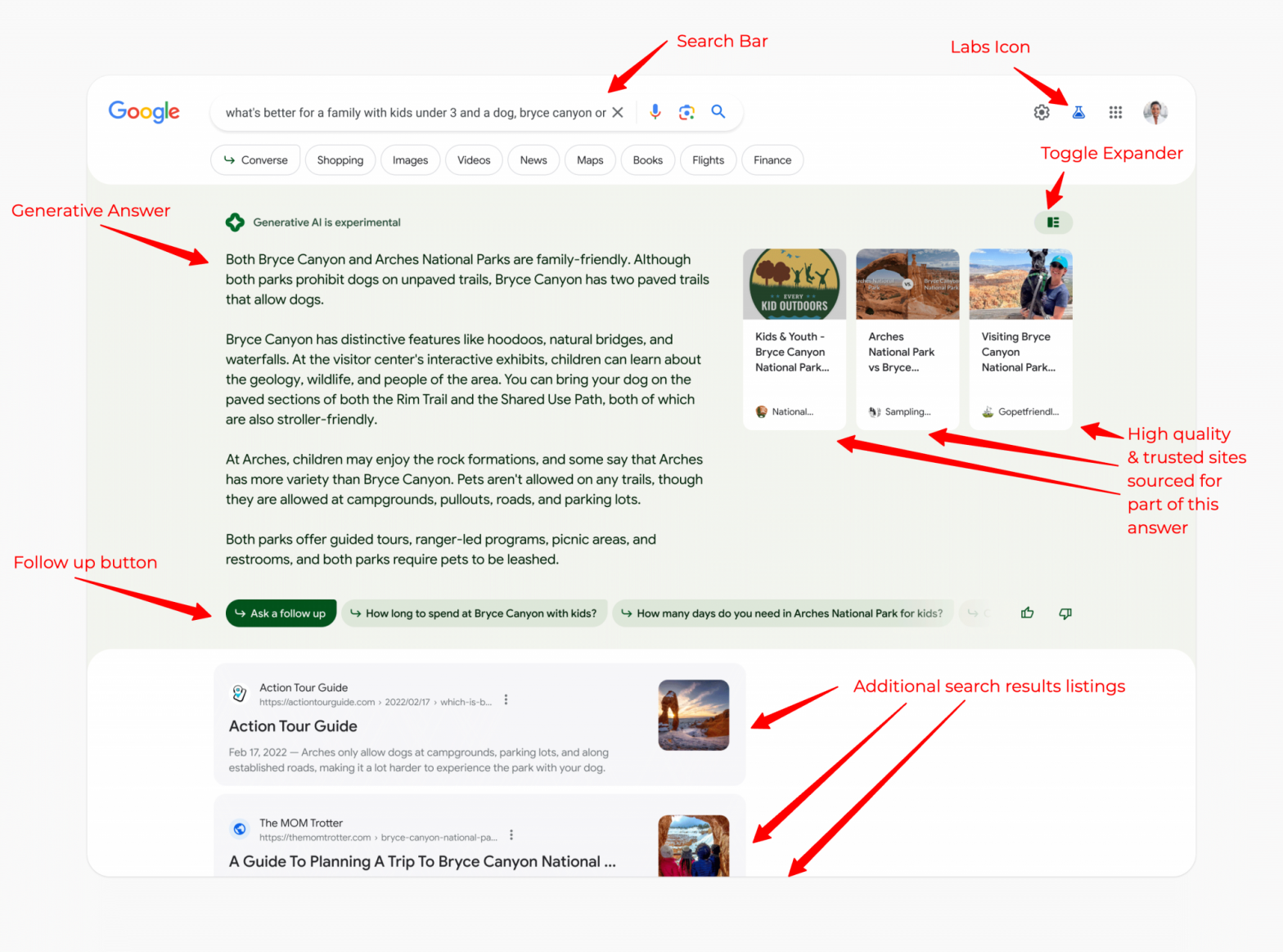

Everyone is talking about the new generative AI search engine interface Google is about to release. Google has designed that interface to give users a fresh experience. The interface is also built to generate clicks from the new search results, so it benefits content creators, publishers, site owners, and SEOs.

Here is how Google plans to make this work: it will generate an answer for some queries, not all, and it will show an animation while it works up that answer. Google will also show some of the more trusted, high-quality websites as clickable links, each with an image, title, and URL, right in the answer box. These sites make up part of the answer, and they are sites Google trusts enough to show in the answer box (see the featured image). There is also a toggle expander button to dive deeper into the answers, and you can ask follow-up questions below. Or you can scroll past the answer box to see the more traditional search result listings.

Google made this work by combining AI, LLMs, and machine learning with many of its search algorithms. The new experience is powered by a variety of large language models, including an advanced version of MUM and PaLM 2. This model is different from the one Bard uses: it was trained to carry out tasks specific to search, including identifying high-quality sites that corroborate the information presented in the output. And of course, it uses Google Search’s core ranking systems for this purpose. Google said this helps them “significantly mitigate” some of the known limitations of LLMs, like hallucination or inaccuracies.

On top of that, Google also uses its Search Quality Raters to evaluate these results and takes that feedback to improve the models going forward. Google also conducts adversarial testing of these systems to identify areas where they aren’t performing as intended, the company told me. YMYL (Your Money or Your Life) comes into play too: if Google does show a response for a YMYL query, it will add a disclaimer to that response. “On health-related queries where we do show a response, the disclaimer emphasizes that people should not rely on the information for medical advice, and they should work with medical professionals for individualized care,” Google said.

Google might not give generative answers when there is a lack of quality or reliable information, such as with “data voids” or “information gaps.” Google also won’t respond to explicit or dangerous topics. It applies the same policies it uses for featured snippets and autocomplete, as well as its other Search content policies. Google would rather not respond in a fluid tone, because searchers are more likely to trust fluid responses even when they contain errors. “We have found that giving the models leeway to create fluid, human-sounding responses results in a higher likelihood of inaccuracies (see limitations below) in the output. At the same time, when responses are fluid and conversational in nature, we have found that human evaluators are more likely to trust the responses and less likely to catch errors,” Google told us.

The bottom line is that the experience may turn out to be massive, or it may not; we will see what it becomes. What we should keep in mind is that AI is not something to fear but something to keep learning. It’s not taking your job.